Check out the new KiviCare Encounter Body Chart add-on!


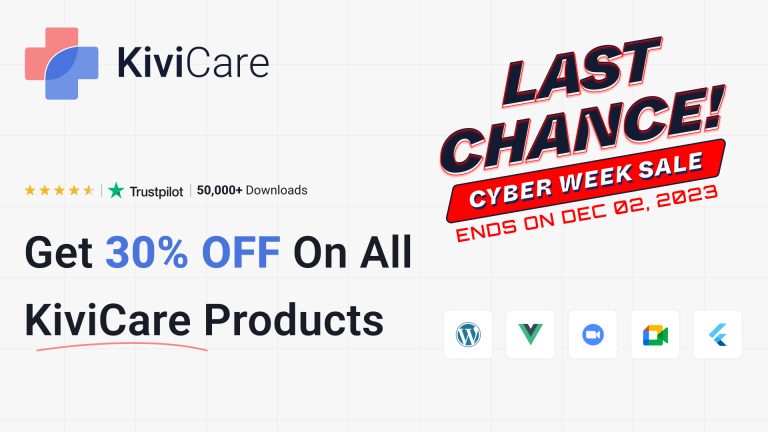

Streamline Your Clinic with KiviCare’s Dynamic Dashboard – Cyber Week Savings Await!

Clinics are crucial in our healthcare system, providing essential medical services to communities. However, managing the daily operations and ensuring top-notch patient care can be a daunting challenge. Overbooking, long waiting times, and billing issues are just a few obstacles…

Patient-Centric Care: How KiviCare Enhances the Patient Experience

Patient-centric care isn’t merely a buzzword; it’s the beating heart of the modern healthcare industry. It’s a paradigm shift from the age-old provider-centric model to one where the patient is truly at the core. Enter KiviCare, a complete clinic management…

Managing Multiple Clinics Made Easy: Exploring KiviCare’s Multi-Clinic Capabilities

Managing multiple clinics in the healthcare sector can be an intricate juggling act. Healthcare professionals who oversee more than one clinic often find themselves entangled in a web of complexities. The demands of patient care, resource allocation, and coordination can…

Creating a Profitable Online Presence with KiviCare’s WooCommerce Integration

In today’s digital age, establishing a robust online presence is not just a trend; it’s a strategic necessity for healthcare practices. The importance of accessibility and convenience in healthcare services cannot be overstated. This is where KiviCare’s WooCommerce integration steps…

Efficient Patient Data Management with KiviCare’s EHR System

Effective patient data management is the backbone of quality healthcare. It ensures that healthcare providers have timely access to accurate and comprehensive patient information, enabling them to deliver better care. In the digital age, KiviCare’s Electronic Health Record (EHR) system…

KiviCare’s Dynamic Dashboard: Real-Time Insights for Clinic Performance

In the fast-paced world of healthcare, managing a clinic efficiently is no small feat. The key to success lies in having the right tools, and that’s where KiviCare steps in. KiviCare offers a dynamic dashboard that can make a difference…

Regenerative Medicine – Your Futuristic Prescription for Healing from Within!

Ladies and gentlemen, welcome to the glamorous world of regenerative medicine, where healing becomes the trendiest accessory of the future! Get ready to don your tech-savvy stilettos and embark on a journey through the realm of regenerative wonders. From groundbreaking…

The Role of Social Media in Shaping the Healthcare Industry & Sparking Conversations!

Welcome to the wild and wonderful world of social media, where the healthcare industry roars to life with tech innovation and futuristic flair! Step into our virtual zoo of social media, where tech wizardry and healthcare conversations intertwine in an…

The Impact of Nutrition on Mental Health – The Gut-Brain Connection

Get ready to explore the intriguing world of the gut-brain connection and how nutrition shapes our state of mind. From techy neurons to future-forward dietary discoveries, we’ll uncover the dance between the gut and brain that impacts our mental well-being…

Unleash the Software Power of Practice Prosperity with KiviCare!

Welcome to the fabulous game of KiviCare’s website wizardry, where practice prosperity becomes a reality! Prepare to be spellbound as we unveil the secrets behind our enchanting website features that will take your practice to new heights. In this blog,…